Generalization, Data Quality, and Scale in Composite Bathymetric Data Processing for Automated Digital Nautical Cartography

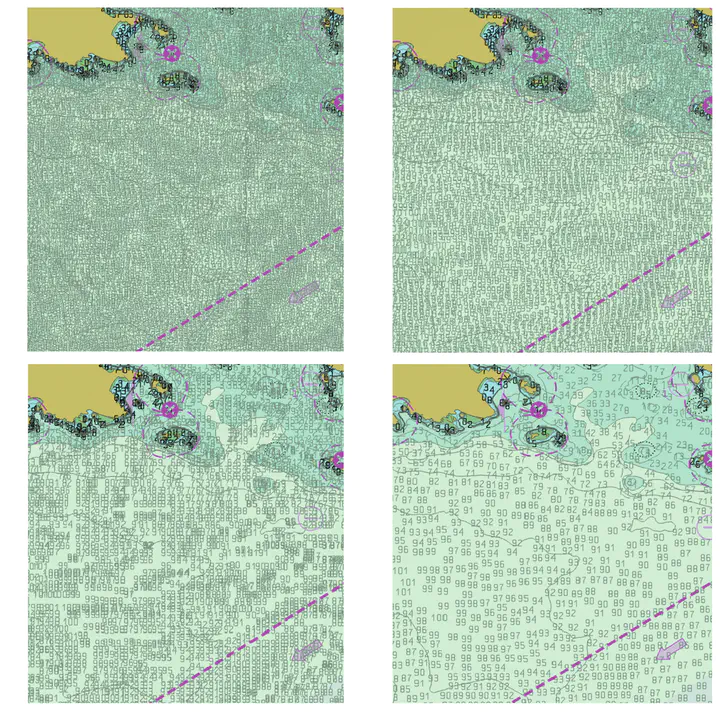

Contemporary bathymetric survey techniques can collect sub-meter resolution data that provide full seafloor coverage for safe navigation and support various other scientific uses. Moreover, bathymetric data are becoming increasingly available from growing hydrographic and topo-bathymetric surveying operations, advancements in satellite-derived bathymetry, and the adoption of crowd-sourced bathymetry. Datasets compiled from these sources are used to update Electronic Navigational Charts (ENCs), the primary medium for visualizing the seafloor for navigation purposes, whose use is mandatory on vessels regulated under the Safety of Life at Sea (SOLAS) convention. However, these high-resolution data must be generalized to suit the scale of each chart product, which remains an active research area in automated cartography. Algorithms that provide consistent results while reducing production time and costs are increasingly valuable to organizations operating in time-sensitive environments. This is particularly the case in digital nautical cartography, where updates to bathymetry and to the locations of dangers to navigation need to be disseminated as quickly as possible. Therefore, our research focuses on developing cartographic constraint-based generalization algorithms that operate on both Digital Surface Model (DSM) and Digital Cartographic Model (DCM) representations of multi-source composite bathymetric data to produce navigationally ready datasets for use at the target chart scale. This research is conducted in collaboration with researchers at the Office of Coast Survey (OCS) of the National Oceanic and Atmospheric Administration (NOAA) and the University of New Hampshire Center for Coastal and Ocean Mapping/Joint Hydrographic Center (CCOM/JHC).